To my understanding, liberalism does value things like that (or at least personal rights and freedoms to some extent, which can include stuff like that). The problem as I see it is that it also includes an overly strong emphasis on personal property rights (for example, one would not expect a liberal government to do something like forcibly nationalize a company, especially without simply buying the shares at market price, because that would be seen as impinging on the rights of the company’s owners).

Now, I don’t object to all personal property, like a person owning the home they live in or something, but if some people own overwhelmingly more than others, that in itself limits the effective rights of others. For example, a person with more money to spend on lawyers is less likely to face justice for crimes than someone else; a person with enough money to buy ads, political lobbyists, or even entire media platforms has their speech go much further than someone of average wealth; and even for property rights themselves, there’s only so much wealth generation to go around, and if someone owns a large percentage of it, that share can’t be owned by anyone else, so other people’s work ends up enriching that one owner.

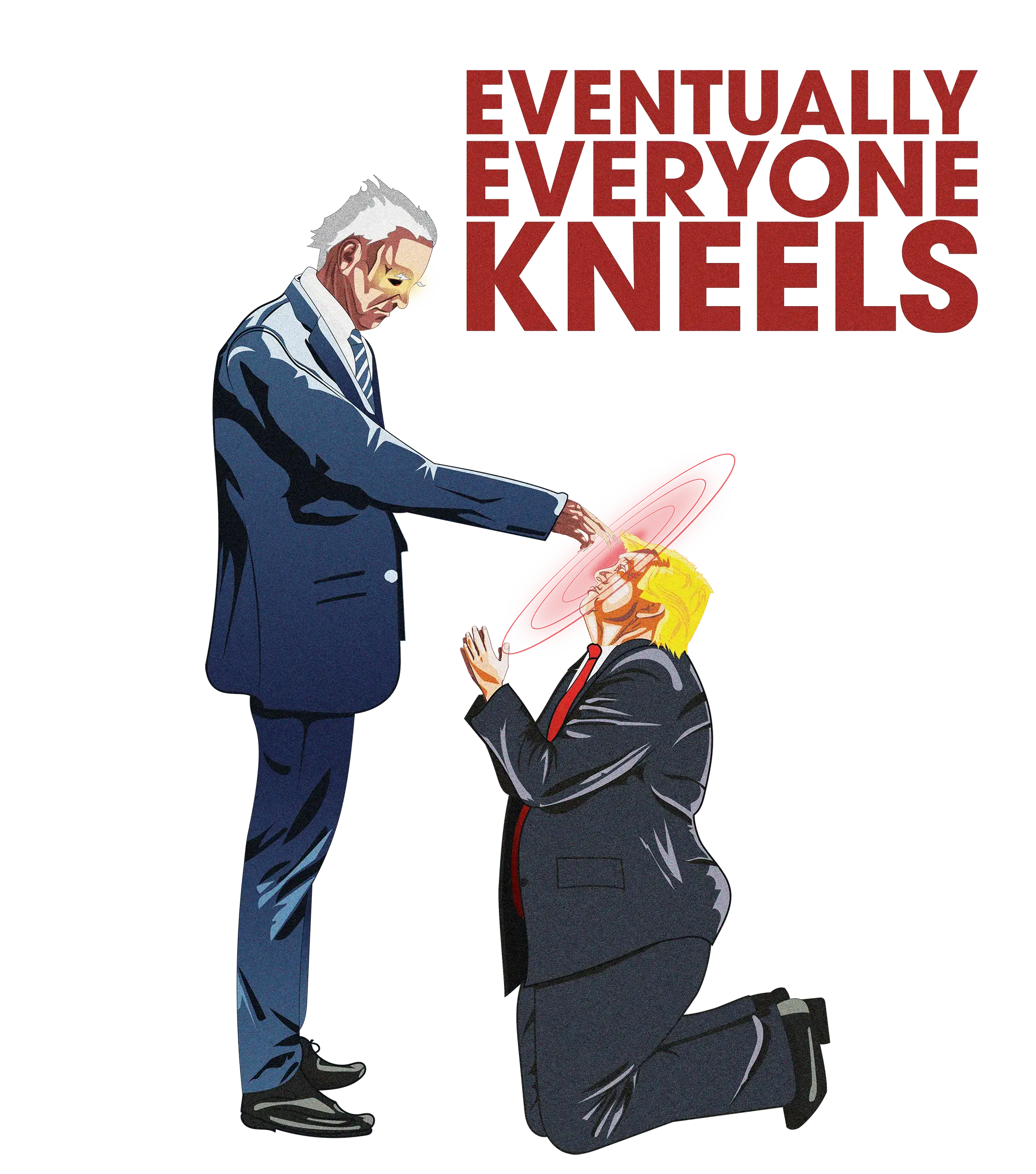

That’s why I find liberalism problematic: it’s generally well intentioned, I think, but by failing to ensure a relatively even distribution of wealth, it lets the other values it tries to promote be subverted and slip away, until eventually a few people have enough power to seize authoritarian control.

As someone pretty new to Linux, what’s wrong with snaps? I’ve seen a lot of memes dunking on them but haven’t run into any issues with the couple that I’ve tried (I even had a problem with a Flatpak version of a program that the snap version fixed, though I think it may have been related to an intentional feature of Flatpaks rather than a bug).